Multi-Step Adaptive Elastic-Net

Usage

msaenet(
  x,
  y,
  family = c("gaussian", "binomial", "poisson", "cox"),
  init = c("enet", "ridge"),
  alphas = seq(0.05, 0.95, 0.05),
  tune = c("cv", "ebic", "bic", "aic"),
  nfolds = 5L,
  rule = c("lambda.min", "lambda.1se"),
  ebic.gamma = 1,
  nsteps = 2L,
  tune.nsteps = c("max", "ebic", "bic", "aic"),
  ebic.gamma.nsteps = 1,
  scale = 1,
  lower.limits = -Inf,
  upper.limits = Inf,
  penalty.factor.init = rep(1, ncol(x)),
  seed = 1001,
  parallel = FALSE,
  verbose = FALSE
)

Arguments

- x

Data matrix.

- y

Response vector if `family` is `"gaussian"`, `"binomial"`, or `"poisson"`. If `family` is `"cox"`, a response matrix created by `Surv`.

- family

Model family, can be `"gaussian"`, `"binomial"`, `"poisson"`, or `"cox"`.

- init

Type of the penalty used in the initial estimation step. Can be `"enet"` or `"ridge"`. See `glmnet` for details.

- alphas

Vector of candidate `alpha`s to use in `cv.glmnet`.

- tune

Parameter tuning method for each estimation step. Possible options are `"cv"`, `"ebic"`, `"bic"`, and `"aic"`. Default is `"cv"`.

- nfolds

Number of cross-validation folds when `tune = "cv"`.

- rule

Lambda selection criterion when `tune = "cv"`, can be `"lambda.min"` or `"lambda.1se"`. See `cv.glmnet` for details.

- ebic.gamma

Parameter for Extended BIC penalizing size of the model space when `tune = "ebic"`, default is `1`. For details, see Chen and Chen (2008).

- nsteps

Maximum number of adaptive estimation steps. At least `2`, assuming adaptive elastic-net has only one adaptive estimation step.

- tune.nsteps

Optimal step number selection method (aggregate the optimal model from each step and compare). Options include `"max"` (select the final-step model directly), or compare these models using `"ebic"`, `"bic"`, or `"aic"`. Default is `"max"`.

- ebic.gamma.nsteps

Parameter for Extended BIC penalizing size of the model space when `tune.nsteps = "ebic"`, default is `1`.

- scale

Scaling factor for adaptive weights: `weights = coefficients^(-scale)`.

- lower.limits

Lower limits for coefficients. Default is `-Inf`. For details, see `glmnet`.

- upper.limits

Upper limits for coefficients. Default is `Inf`. For details, see `glmnet`.

- penalty.factor.init

The multiplicative factor for the penalty applied to each coefficient in the initial estimation step. This is useful for incorporating prior information about variable weights, for example, emphasizing specific clinical variables. To make certain variables more likely to be selected, assign a smaller value. Default is `rep(1, ncol(x))`.

- seed

Random seed for cross-validation fold division.

- parallel

Logical. Enable parallel parameter tuning or not, default is `FALSE`. To enable parallel tuning, load the `doParallel` package and run `registerDoParallel()` with the number of CPU cores before calling this function.

- verbose

Logical. Whether to print the estimation progress. Default is `FALSE`.
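The `scale` and `ebic.gamma` arguments both refer to short formulas. The following is a minimal base-R sketch of those two quantities, not the package's internal code. The helper names `adaptive_weights()` and `ebic()` are hypothetical, the floor at `.Machine$double.eps` is an assumption added here so that coefficients that are exactly zero do not produce a division by zero, and the EBIC form follows Chen and Chen (2008) with the model deviance standing in for minus twice the log-likelihood.

```r
# Hypothetical helper: adaptive penalty weights derived from the previous
# step's coefficients, following weights = coefficients^(-scale).
# Assumption: absolute values are used and floored at machine epsilon, so
# zero coefficients get a very large but finite weight.
adaptive_weights <- function(coefs, scale = 1) {
  pmax(abs(coefs), .Machine$double.eps)^(-scale)
}

# Hypothetical helper: Extended BIC for a model with `df` non-zero
# coefficients chosen among `nvar` candidates, fitted on `nobs` samples.
# The last term penalizes the size of the model space via log(choose(nvar, df)).
ebic <- function(deviance, df, nobs, nvar, gamma = 1) {
  deviance + log(nobs) * df + 2 * gamma * lchoose(nvar, df)
}

coefs <- c(2, -0.5, 0)
w <- adaptive_weights(coefs, scale = 1)
# Larger coefficients receive smaller penalties; the zero coefficient is
# penalized so heavily that it is effectively excluded.
print(w)

# With gamma = 0 the criterion reduces to the ordinary BIC.
print(ebic(deviance = 10, df = 2, nobs = 100, nvar = 10, gamma = 0))
print(ebic(deviance = 10, df = 2, nobs = 100, nvar = 10, gamma = 1))
```

A larger `scale` sharpens the contrast between weights, and a larger `gamma` favors sparser models when the number of candidate variables is large.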

References

Nan Xiao and Qing-Song Xu. (2015). Multi-step adaptive elastic-net: reducing false positives in high-dimensional variable selection. Journal of Statistical Computation and Simulation 85(18), 3755–3765.

Author

Nan Xiao <https://nanx.me>

Examples

dat <- msaenet.sim.gaussian(
  n = 150, p = 500, rho = 0.6,
  coef = rep(1, 5), snr = 2, p.train = 0.7,
  seed = 1001
)

msaenet.fit <- msaenet(
  dat$x.tr, dat$y.tr,
  alphas = seq(0.2, 0.8, 0.2),
  nsteps = 3L, seed = 1003
)

print(msaenet.fit)
#> Call: msaenet(x = dat$x.tr, y = dat$y.tr, alphas = seq(0.2, 0.8, 0.2),
#>     nsteps = 3L, seed = 1003)
#>   Df     %Dev       Lambda
#> 1  8 0.797121 1.219202e+15

msaenet.nzv(msaenet.fit)
#> [1] 2 4 5 35 114 269 363 379

msaenet.fp(msaenet.fit, 1:5)
#> [1] 5

msaenet.tp(msaenet.fit, 1:5)
#> [1] 3

msaenet.pred <- predict(msaenet.fit, dat$x.te)

msaenet.rmse(dat$y.te, msaenet.pred)
#> [1] 2.839212

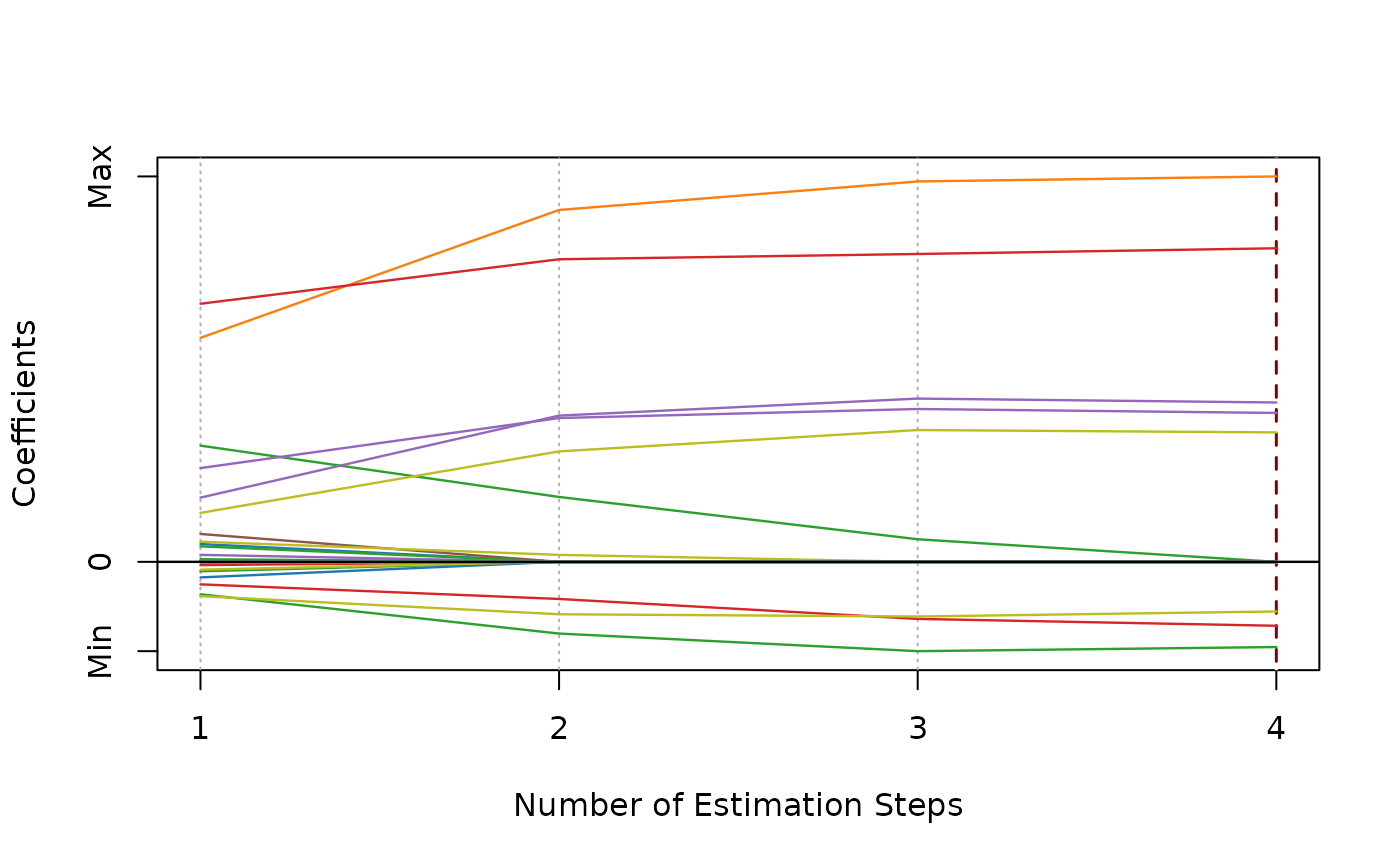

plot(msaenet.fit)
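As a further illustration of the arguments described earlier, the sketch below lowers the initial-step penalty for a few variables through `penalty.factor.init` and lets EBIC choose both the tuning parameters and the number of steps. The choice of variables 1 to 5, the factor `0.5`, and `nsteps = 5L` are arbitrary values picked for illustration, and the `requireNamespace()` guard is only there so the snippet does nothing when the package is not installed. Output is omitted because it may vary with the installed package versions.

```r
p <- 500L

# Illustrative prior weights (an assumption, not a recommendation): halve the
# initial penalty for the first five variables so they are more likely to
# survive the initial estimation step.
pf <- rep(1, p)
pf[1:5] <- 0.5

if (requireNamespace("msaenet", quietly = TRUE)) {
  library(msaenet)

  dat <- msaenet.sim.gaussian(
    n = 150, p = p, rho = 0.6,
    coef = rep(1, 5), snr = 2, p.train = 0.7,
    seed = 1001
  )

  fit <- msaenet(
    dat$x.tr, dat$y.tr,
    alphas = seq(0.2, 0.8, 0.2),
    tune = "ebic",           # tune each estimation step by EBIC instead of CV
    nsteps = 5L,             # fit up to five adaptive steps
    tune.nsteps = "ebic",    # compare the per-step models by EBIC
    penalty.factor.init = pf,
    seed = 1003
  )

  # Indices of the selected variables, then true and false positives
  # relative to the five truly non-zero coefficients.
  print(msaenet.nzv(fit))
  print(msaenet.tp(fit, 1:5))
  print(msaenet.fp(fit, 1:5))
}
```

Because `tune = "ebic"` does not use cross-validation, `nfolds` and `rule` have no effect in this call.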