Five years ago, GNOME 3’s immaturity was one of the reasons I switched to a Mac. After finishing two seasons of Mr. Robot, I somehow missed it. With my AWS/GCP bills going up quite a bit recently, I quickly decided to invest a little and build a PC, both to retry the Linux desktop environment and to tackle some personal machine learning projects.

Hardware

Here is my PCPartPicker part list for the parts of choice. It mostly comes from a blog post, with only slight modifications to fit my needs (such as the 32 GB memory). Do a quick search and you will find similar posts with recommended parts; they are good starting points. They also show which choices are popular on the market in general and help avoid glaring mistakes.

These are all the parts needed.

Hardware Notes

This machine is pretty much built around the GPU, which accounts for about half of the total price. Therefore, our goal here is to optimize around the GPU. Some personal notes:

- PSU. Purchase a decent power supply – it needs to be reliable for 24x7 training.

- CPU. Doesn’t need to be a fancy one like the i7-8700. It shouldn’t be too low-end either, so it won’t become an accidental bottleneck. Plus, sometimes we do train models on the CPU.

- RAM. As much as we can get. It is fine to leave some room for upgrades.

- GPU. Buy brand new GPUs from reliable sellers. We don’t really know what has happened to used video cards these days… Some were used to mine cryptocurrencies intensively, and their lifespan could be severely affected.

- Number of GPUs. One is OK for a start. If you know for sure that you will need to scale to 2 or 4 cards in the future, choose a motherboard that supports 4-way SLI, a larger chassis, and of course, a 1600W power supply. Parallelizing across multiple cards can be non-trivial for specific things though, such as cross-card synchronized batch normalization.
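To see roughly where a figure like 1600W comes from, here is a back-of-the-envelope sketch in Python. The 250 W per card is the published board power of a GTX 1080 Ti; the CPU wattage, the allowance for everything else, the 30% headroom, and the function name `recommended_psu_watts` are all assumptions for illustration, not measurements from this build.

```python
import math

def recommended_psu_watts(n_gpus, gpu_watts=250, cpu_watts=95,
                          other_watts=100, headroom=0.3, step=50):
    """Sum assumed peak draws, add headroom, round up to a multiple of `step`."""
    # All default wattages except gpu_watts are illustrative guesses.
    peak = n_gpus * gpu_watts + cpu_watts + other_watts
    return math.ceil(peak * (1 + headroom) / step) * step

for n in (1, 2, 4):
    print(n, "GPU(s):", recommended_psu_watts(n), "W")
```

With these assumed numbers, one card lands around 600 W and four cards around 1600 W. Plug in the real figures for your own parts before buying anything.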

Let’s Build It Up

It took me about four hours to build, and nothing was too challenging. In principle, all we need to do is connect things like this: power supply <> wires <> motherboard <> wires/slots <> CPU/RAM/GPU/SSD. For the overall steps, here is a video tutorial on how to build a PC in 2018. Read the manual or search for videos about specific parts when confused.

Finished build. Serviceable cable management.

Software

For the Linux distribution, I personally like Arch Linux since the AUR is so good (think Homebrew on Linux, but able to install anything thanks to easy user contributions). For this machine, though, I don’t want to spend too much time upgrading bleeding-edge kernels; it just needs to work. Thus a stock Ubuntu 18.04 LTS was spinelessly installed. It’s a good thing that Canonical finally dropped Unity and embraced the native GNOME Shell in 18.04.

To set up the environment for deep learning, people generally recommend installing specific versions of the software, since the latest versions may not work properly together. I followed this post to install the GPU driver, and this post for CUDA and cuDNN. For TensorFlow, simply follow the official installation guide. It should all be done in 20 minutes if everything goes well.
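Once the driver is in, a quick sanity check is to ask `nvidia-smi` (the command-line tool that ships with the Nvidia driver) which GPUs it can see. Below is a minimal sketch that wraps that call in Python; the helper name `list_gpus` is hypothetical, and it simply returns an empty list when the tool is missing or fails.

```python
import shutil
import subprocess

def list_gpus():
    """Return one 'name, driver_version, memory.total' string per visible GPU."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools are not installed or not on PATH
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=name,driver_version,memory.total",
         "--format=csv,noheader"],
        stdout=subprocess.PIPE, stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    if result.returncode != 0:
        return []  # tool is present but the driver is not working
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]

if __name__ == "__main__":
    gpus = list_gpus()
    print(gpus if gpus else "No Nvidia GPU visible; check the driver installation.")
```

If this prints nothing useful, there is little point in moving on to CUDA, cuDNN, or TensorFlow yet.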

I’m just surprised that after all these years, Nvidia’s proprietary GPU driver and computing SDK are still tricky to work with, and developers are still locked in to old versions.

Test Flight

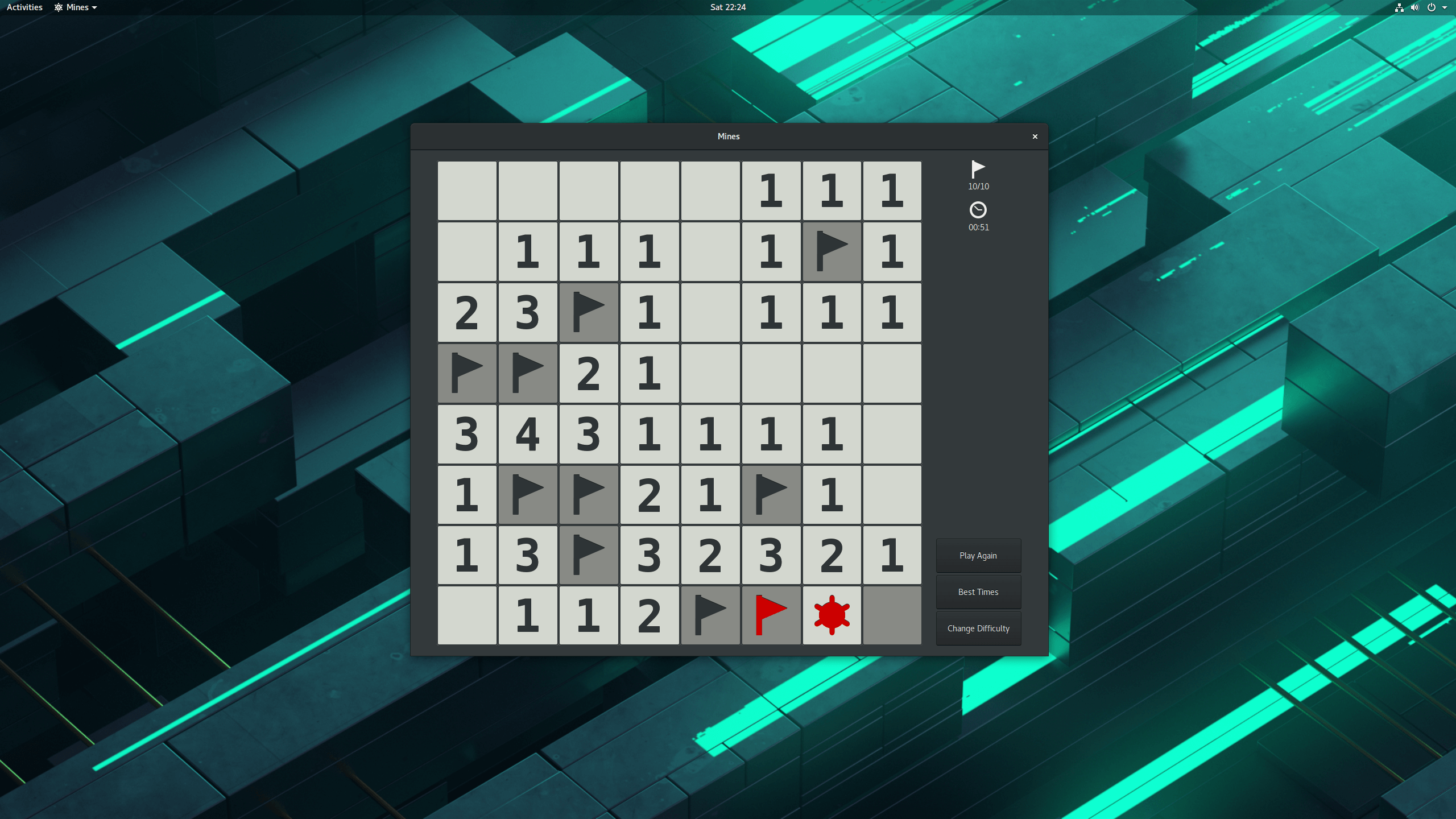

First of all, myth busted: the 1080 Ti can run Minesweeper effortlessly.

Running GNOME Mines smoothly. The machine did restart itself once for no obvious reason after the proprietary GPU driver was installed.

Back to the topic… Here is some R code for fitting a “wide and deep” classification model with TensorFlow and the TensorFlow Estimators API. The model is fundamentally a direct combination of a linear model and a DNN model. The synthetic data has 1 million observations and 100 features (20 being useful), and is generated by my R package msaenet.
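To make the “direct combination” concrete: a wide and deep classifier adds the logit from a linear model to the logit from a feed-forward network, then passes the sum through a sigmoid. The following is a minimal pure-Python sketch of that forward pass only. It is not the R/TensorFlow code used for the benchmark; the single hidden layer, its size, the random weights, and all function names are made up for illustration.

```python
import math
import random

def linear_logit(x, w, b):
    """Wide part: a plain linear model."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def dnn_logit(x, hidden_w, hidden_b, out_w, out_b):
    """Deep part: one ReLU hidden layer followed by a linear output unit."""
    hidden = [max(0.0, sum(wi * xi for wi, xi in zip(row, x)) + bj)
              for row, bj in zip(hidden_w, hidden_b)]
    return sum(wi * hi for wi, hi in zip(out_w, hidden)) + out_b

def wide_and_deep_prob(x, wide, deep):
    """Sum the two logits, then squash the result to a probability."""
    z = linear_logit(x, *wide) + dnn_logit(x, *deep)
    return 1.0 / (1.0 + math.exp(-z))

def rand_vec(n):
    """Small random values standing in for trained weights or inputs."""
    return [random.gauss(0, 0.1) for _ in range(n)]

random.seed(42)
p, h = 100, 8  # 100 features as in the text; 8 hidden units is arbitrary
wide = (rand_vec(p), 0.0)
deep = ([rand_vec(p) for _ in range(h)], [0.0] * h, rand_vec(h), 0.0)
x = rand_vec(p)
print(wide_and_deep_prob(x, wide, deep))
```

In training, both parts are fitted jointly against the same loss, so the linear part keeps the direct feature effects while the network picks up the interactions.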

The training takes about 60 seconds on the GPU and 50 seconds on the CPU with identical results. For this particular case, the CPU is not bad at all! The GPU should have a significant edge when training more complex neural nets for vision or language tasks, though.

On model performance, the 83% AUC is easily 5% to 10% higher than that of well-tuned sparse linear models. I guess the message here is that if we want to trade some of the full interpretability of a sparse linear model for some predictive performance, this model is a feasible choice, just with some additional parameter tuning.
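For reference, AUC can be read as the probability that a randomly chosen positive observation gets a higher score than a randomly chosen negative one. Here is a small pure-Python sketch of that definition. It compares every positive with every negative, so it is only meant for tiny examples; the labels and scores are made up, and this is not the evaluation code from the repo.

```python
def auc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy data: 8 of the 9 positive/negative pairs are ordered correctly.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
print(auc(labels, scores))
```

A model that scores at random sits at 0.5 on this scale, which is a useful baseline when reading the numbers above.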

Here is the GitHub repo for the R code.